SenticNet 5 - Discovering Conceptual Primitives for Sentiment Analysis by Means of Context Embeddings

This paper was published at AAAI 2018. The authors couple symbolic and sub-symbolic AI to automatically discover conceptual primitives from text and link them to commonsense concepts and named entities in a new three-level knowledge representation for sentiment analysis. In particular, they employ a recurrent neural network (RNN) to infer primitives by lexical substitution and use them to ground common and commonsense knowledge by means of multi-dimensional scaling.

Table of Contents

- Motivation

- Learning Representations

- Linking Primitives to Concepts and Entities

- API

- Conclusion

Motivation

Deep learning has recently driven major advances in computer vision and speech recognition, whereas NLP seems to have made less impressive progress. The authors argue that one reason is that NLP requires more top-down approaches than those fields, which benefit directly from large amounts of data. Specifically, they believe that researchers need to combine statistical NLP with other disciplines, such as linguistics and commonsense reasoning, to understand natural language.

In this work, they develop an ensemble of symbolic and sub-symbolic approaches to encode meaning and discover verb-noun primitives via lexical substitution. They build a three-level knowledge representation for sentiment analysis, termed SenticNet 5, which encodes the denotative and connotative information commonly associated with real-world objects, events, and people.

Learning Representations

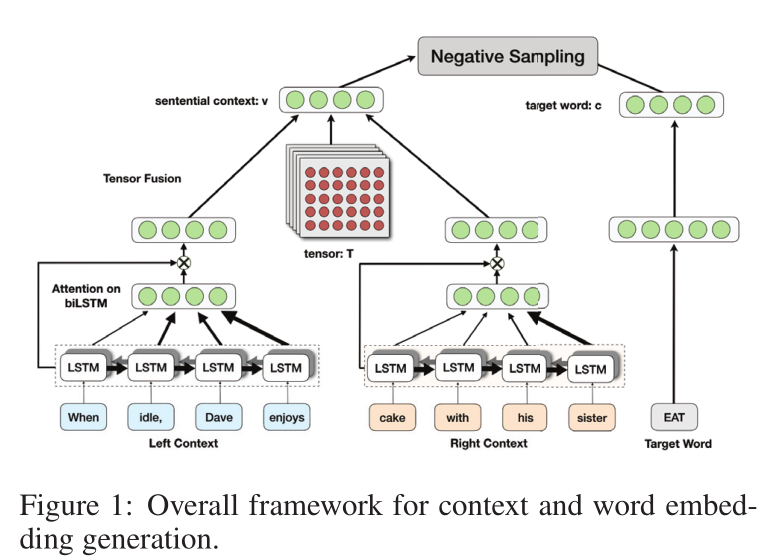

The overall framework is shown in Figure 1. Each sentence \(S=[w_1, w_2, ..., w_n]\) is divided into three segments: the left context, the target word, and the right context, which contain \(l\), one, and \(r\) words respectively, so that \(n = l + r + 1\).
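The segmentation can be illustrated with a few lines of Python. This is only a minimal sketch: the names `ContextSplit` and `split_sentence` and the example sentence are made up for illustration and do not come from the paper.

```python
from typing import List, NamedTuple


class ContextSplit(NamedTuple):
    left: List[str]    # the l words before the target
    target: str        # the single target word
    right: List[str]   # the r words after the target


def split_sentence(words: List[str], target_index: int) -> ContextSplit:
    """Split a tokenized sentence around the word at target_index."""
    if not 0 <= target_index < len(words):
        raise IndexError("target_index is outside the sentence")
    return ContextSplit(
        left=words[:target_index],
        target=words[target_index],
        right=words[target_index + 1:],
    )


# Hypothetical example sentence; the target word is "cook".
sentence = "the chef will cook a pasta dish".split()
split = split_sentence(sentence, 3)
print(split.left)    # ['the', 'chef', 'will']
print(split.target)  # cook
print(split.right)   # ['a', 'pasta', 'dish']
```

Here \(l = 3\) and \(r = 3\), so the sentence has \(n = 7\) words.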

Word Embedding and biLSTM

Each word is represented by a pretrained 300-dimensional word2vec embedding. The left context is fed sequentially into a biLSTM, which produces one hidden state per word. An attention mechanism is then applied to these hidden states, and their weighted average serves as the final representation of the left context. The right context is processed in the same way. To obtain a comprehensive representation of the context for a particular concept, the left and right context representations are combined by bilinear fusion together with a single-layer neural network. The vector representation of the target word is computed by a multilayer neural network.
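The pooling and fusion steps can be sketched in NumPy. Several things here are assumptions rather than details from the paper: the attention scores are taken as dot products with a learned query vector, the fusion is written as a bilinear term plus a single linear layer followed by tanh, and random matrices stand in for the biLSTM outputs and the learned parameters. All function and variable names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - np.max(x))  # subtract the max for numerical stability
    return e / e.sum()


def attention_pool(hidden: np.ndarray, query: np.ndarray) -> np.ndarray:
    """Weighted average of hidden states; hidden has shape [steps, dim]."""
    weights = softmax(hidden @ query)  # one attention weight per time step
    return weights @ hidden            # shape [dim]


def bilinear_fuse(left, right, tensor, weight, bias):
    """Assumed fusion: tanh(left^T T_k right + W [left; right] + b)."""
    bilinear = np.einsum("i,kij,j->k", left, tensor, right)
    linear = weight @ np.concatenate([left, right])
    return np.tanh(bilinear + linear + bias)


rng = np.random.default_rng(0)
dim, out_dim = 6, 5
left_states = rng.normal(size=(3, dim))   # stand-in for biLSTM states, l = 3
right_states = rng.normal(size=(4, dim))  # stand-in for biLSTM states, r = 4
query = rng.normal(size=dim)              # stand-in for a learned query

left_vec = attention_pool(left_states, query)
right_vec = attention_pool(right_states, query)
context_vec = bilinear_fuse(
    left_vec,
    right_vec,
    tensor=rng.normal(size=(out_dim, dim, dim)),
    weight=rng.normal(size=(out_dim, 2 * dim)),
    bias=np.zeros(out_dim),
)
print(context_vec.shape)  # (5,)
```

In a real model the query, tensor, weight, and bias would be trained jointly with the biLSTM rather than sampled at random.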

Negative Sampling

To learn appropriate representations of the sentential context and the target word, the authors use the negative sampling objective from word2vec. Specifically, the training objective is formulated as

\[Obj = \sum_{c, v}\left(\log\sigma(c \cdot v) + \sum_{i=1}^{z}\log\sigma(-c_i \cdot v)\right)\]

where each \((c, v)\) is a valid pair of target word and context. For every context, \(z\) invalid words \(c_1, \dots, c_z\) are sampled as negative examples; the minus sign negates the score of an invalid word \(c_i\) with respect to the context \(v\).
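For a single pair, the objective can be written out in NumPy as below. This sketch follows the standard word2vec form, in which \(\sigma\) is applied to the dot product of a word vector and a context vector; the function names and the toy vectors are illustrative assumptions.

```python
import numpy as np


def log_sigmoid(x):
    # log(sigmoid(x)) = -log(1 + exp(-x)), computed in a stable way
    return -np.logaddexp(0.0, -x)


def negative_sampling_objective(target, context, negatives):
    """Objective for one (target, context) pair.

    target:    vector of the valid target word, shape [dim]
    context:   vector of its context, shape [dim]
    negatives: vectors of z invalid words, shape [z, dim]
    """
    positive = log_sigmoid(target @ context)
    negative = log_sigmoid(-(negatives @ context)).sum()
    return positive + negative


# Toy vectors: the valid word points the same way as the context,
# the two invalid words point away from it.
context = np.array([1.0, 0.0])
target = np.array([1.0, 0.0])
negatives = np.array([[-1.0, 0.0], [-0.5, 0.5]])
print(negative_sampling_objective(target, context, negatives))
```

Training maximizes this quantity summed over all valid pairs, which pushes valid word vectors toward their contexts and sampled invalid words away from them.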

Similarity Index

After the vector representations of the target word and its context have been learned, lexical substitution is performed by ranking each candidate word according to its similarity to both the target word and the context. The paper proposes two simple measures, written \(dist\), for this ranking; since both are built from cosine similarities, a larger value indicates a better substitute:

\[dist(b,(c,v)) = \cos(b,c) + \cos(b,v)\]

and

\[dist(b,(c,v)) = \cos(b,c)\cos(b,v)\]

where \(b\) is the representation of a candidate word, and \(c\) and \(v\) are the representations of the target word and its sentential context, respectively. These measures lead to substitutions of pairs that bear the same conceptual meaning in the given context.
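Both measures are straightforward to compute once the vectors are available. The following sketch ranks candidates by either measure; the two-dimensional vectors and the candidate labels are invented for illustration, and the function names are hypothetical.

```python
import numpy as np


def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def score_add(b, c, v):
    # additive measure: cos(b, c) + cos(b, v)
    return cosine(b, c) + cosine(b, v)


def score_mult(b, c, v):
    # multiplicative measure: cos(b, c) * cos(b, v)
    return cosine(b, c) * cosine(b, v)


def rank_candidates(candidates, c, v, score=score_add):
    """Return candidate names sorted from best to worst substitute."""
    return sorted(
        candidates,
        key=lambda name: score(candidates[name], c, v),
        reverse=True,
    )


c = np.array([1.0, 0.0])  # toy target-word vector
v = np.array([0.0, 1.0])  # toy context vector
candidates = {
    "good": np.array([1.0, 1.0]),     # close to both target and context
    "partial": np.array([1.0, 0.0]),  # close to the target only
    "poor": np.array([0.1, -1.0]),    # points away from the context
}
print(rank_candidates(candidates, c, v, score_add))   # ['good', 'partial', 'poor']
print(rank_candidates(candidates, c, v, score_mult))  # ['good', 'partial', 'poor']
```

One property worth noting is that the multiplicative measure also becomes positive when both cosines are negative, so the two measures can disagree for candidates that are dissimilar to both the target word and the context.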

Linking Primitives to Concepts and Entities

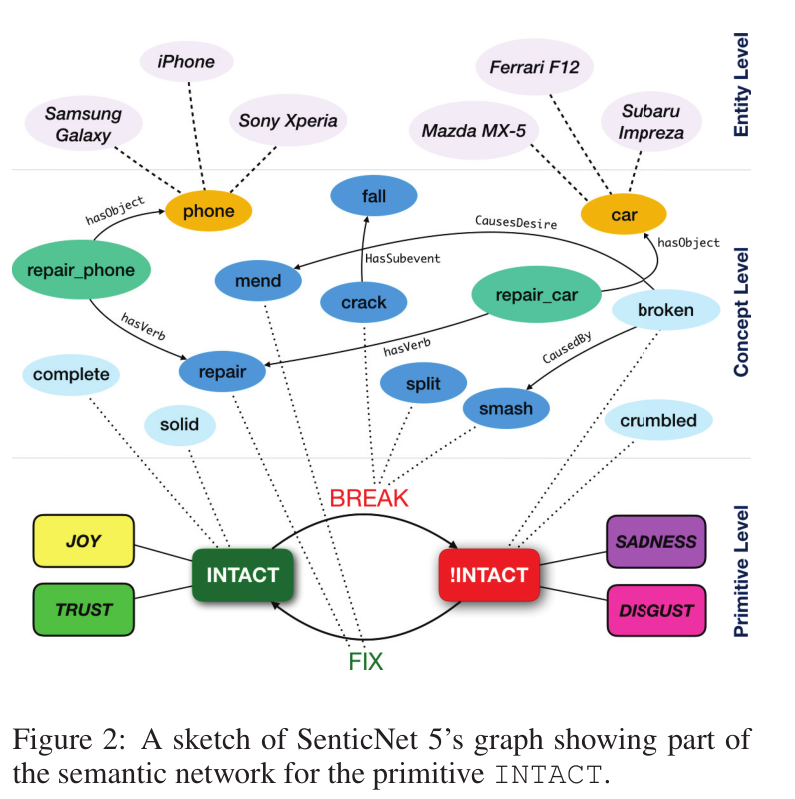

SenticNet 5 is a three-level semantic network: the Primitive Level is where basic states, actions and the interaction between them are defined by means of primitives; the Concept Level is where commonsense concepts are interconnected through semantic relationships; the Entity Level is where named entities are linked to commonsense concepts by IsA relationships. A sample graph is illustrated in Figure 2.
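The three levels can be pictured as two layers of links: entities point to concepts through IsA, and concepts point to primitives. The sketch below is a deliberately simplified model that leaves out the semantic relationships between concepts; the class name, its methods, and every label are hypothetical placeholders rather than entries from SenticNet 5.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ThreeLevelNetwork:
    # Concept Level -> Primitive Level: the primitive a concept generalizes to
    concept_to_primitive: Dict[str, str] = field(default_factory=dict)
    # Entity Level -> Concept Level: IsA links from named entities to concepts
    entity_is_a: Dict[str, str] = field(default_factory=dict)

    def add_concept(self, concept: str, primitive: str) -> None:
        self.concept_to_primitive[concept] = primitive

    def add_entity(self, entity: str, concept: str) -> None:
        self.entity_is_a[entity] = concept

    def primitive_of_entity(self, entity: str) -> Optional[str]:
        """Follow entity -IsA-> concept -> primitive, if both links exist."""
        concept = self.entity_is_a.get(entity)
        if concept is None:
            return None
        return self.concept_to_primitive.get(concept)


# All labels below are invented placeholders, not SenticNet 5 entries.
net = ThreeLevelNetwork()
net.add_concept("example_concept", "EXAMPLE_PRIMITIVE")
net.add_entity("ExampleEntity", "example_concept")
print(net.primitive_of_entity("ExampleEntity"))  # EXAMPLE_PRIMITIVE
print(net.primitive_of_entity("UnknownEntity"))  # None
```

The point of the layering is that information attached to a primitive can be reached from any concept or entity linked to it.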

Affective Space

In order to automatically infer the polarity of key states, the authors use AffectiveSpace, a vector space of affective commonsense knowledge built by means of random projections. By exploiting the information-sharing property of random projections, concepts with the same semantic and affective valence are likely to have similar features, i.e., they tend to fall near each other in AffectiveSpace.
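The idea that a random projection keeps similar concepts close together can be demonstrated on toy data. This sketch uses a plain Gaussian random projection and cosine nearest neighbours; it is not the AffectiveSpace construction itself, and the feature matrix, dimensions, and function names are assumptions made for illustration.

```python
import numpy as np


def random_projection(features, out_dim, seed=0):
    """Project rows of features to out_dim dimensions with a Gaussian matrix."""
    rng = np.random.default_rng(seed)
    in_dim = features.shape[1]
    projection = rng.normal(size=(in_dim, out_dim)) / np.sqrt(out_dim)
    return features @ projection


def nearest(vectors, index):
    """Index of the row most cosine-similar to vectors[index], excluding itself."""
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    sims = unit @ unit[index]
    sims[index] = -np.inf
    return int(np.argmax(sims))


rng = np.random.default_rng(1)
base = rng.normal(size=50)
# Rows 0 and 1 share the same feature pattern; row 2 is unrelated.
features = np.stack([base, 2.0 * base, rng.normal(size=50)])

projected = random_projection(features, out_dim=10)
print(projected.shape)        # (3, 10)
print(nearest(projected, 0))  # 1
```

Because the projection is linear, rows with the same feature pattern remain parallel after projection, which is why row 1 is still the nearest neighbour of row 0 in the reduced space.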

API

The authors have open-sourced the SenticNet project on GitHub, and users can freely access its API:

http://sentic.net/api/LANGUAGE/concept/CONCEPT_NAME

where LANGUAGE is a two-letter language code, e.g., “en” for English, and CONCEPT_NAME is the concept to look up. Readers may refer to http://sentic.net/api/ for more details about API usage.
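A request URL following this pattern can be assembled with the Python standard library. This is a minimal sketch under stated assumptions: the helper names are hypothetical, the concept name is percent-encoded as a precaution, and nothing is assumed about the format of the response or about whether the endpoint is currently reachable.

```python
from urllib.parse import quote
from urllib.request import urlopen

API_TEMPLATE = "http://sentic.net/api/{language}/concept/{concept}"


def build_concept_url(language: str, concept: str) -> str:
    """Fill the URL pattern with a two-letter language code and a concept."""
    if len(language) != 2 or not language.isalpha():
        raise ValueError("language must be a two-letter code such as 'en'")
    return API_TEMPLATE.format(
        language=language.lower(),
        concept=quote(concept, safe=""),
    )


def fetch_concept(language: str, concept: str, timeout: float = 10.0) -> str:
    """Return the raw response body; its format is not assumed here."""
    url = build_concept_url(language, concept)
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(build_concept_url("en", "love"))  # http://sentic.net/api/en/concept/love
```

Calling `fetch_concept("en", "love")` would issue the actual request; the API page linked above describes what the service returns.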

Conclusion

This work uses an ensemble of symbolic and sub-symbolic AI to automatically discover conceptual primitives for sentiment analysis. This generalization process extends the coverage of SenticNet and builds a new knowledge representation that better encodes semantics and sentiment.